What Is AWS Fargate & How Does It Work? Serverless Compute Engine Explained

What is AWS Fargate, and how does it work? Amazon Web Services (AWS) Fargate is a serverless container solution that offers a powerful way to run containers without managing servers. Keep reading to learn how it works, understand its benefits and limitations, and see how it compares to other AWS services.

Businesses are embracing containerized applications to improve flexibility, speed up deployment and scale on demand. However, managing containers at scale poses challenges, especially when it comes to provisioning compute resources, handling updates and managing infrastructure. AWS Fargate offers a powerful yet simple solution within the Amazon Web Services (AWS) ecosystem.

AWS Fargate allows you to run containers without worrying about server management. As a serverless compute engine for containers, it eliminates the need to provision, scale and maintain container servers. This guide offers a deep dive into Fargate, exploring its functionality, benefits and limitations while comparing it to other cloud computing services in the AWS ecosystem.

What Is AWS Fargate?

AWS Fargate is a serverless compute engine that lets users run containers without managing servers or infrastructure. It integrates with Amazon Elastic Container Service (ECS) and Amazon Elastic Kubernetes Service (EKS), allowing developers and operations teams to deploy containerized applications without the complexity of provisioning, scaling and maintaining virtual machines.


Instead of having you provision EC2 instances manually, Fargate abstracts the underlying infrastructure. Developers describe the application’s needs in a task definition (on ECS) or a pod specification (on EKS). They specify the CPU, memory, networking and storage requirements, and Fargate automatically provisions matching resources.

This means no more patching servers, configuring instance types or fine-tuning resource utilization. Users can focus on the code while AWS handles the rest.

When a Fargate task is launched, AWS provisions all the required compute resources and runs the task in its own isolated environment for better security and performance. Paired with service auto scaling, Fargate lets the number of running tasks track demand, which keeps costs in line with load without sacrificing performance.

Since Fargate is a serverless solution, users don’t pay for idle servers. They are charged for the vCPU and memory their tasks request, and only for as long as those tasks run.

Key Components of AWS Fargate

AWS Fargate simplifies container deployment by handling the infrastructure management. Understanding its core components will help you design better solutions. The components are building blocks that work together to run containers efficiently while abstracting servers. Let’s have a look at each component and see how they function in real-life scenarios.

AWS Fargate Key Features

AWS Fargate offers a powerful set of features designed to simplify container deployment while maintaining performance, security and scalability. These features let developers focus on building applications rather than managing infrastructure. Here are some of the most valuable features that make Fargate a top choice for running containerized workloads in the cloud.

Step by Step: How Does AWS Fargate Work?

AWS Fargate helps you run containers without having to manage the underlying infrastructure. It streamlines the entire container lifecycle so that teams can focus on building applications instead of provisioning servers. Here is a step-by-step walkthrough of how Fargate works.

1. Create a Task Definition

A task definition is a JSON document that acts as a blueprint for your containerized workload. It specifies requirements such as CPU and memory, container images, entry point commands, port mappings, environment variables and logging configurations. Users can also define volume mounts and list several containers to run together within the same task.

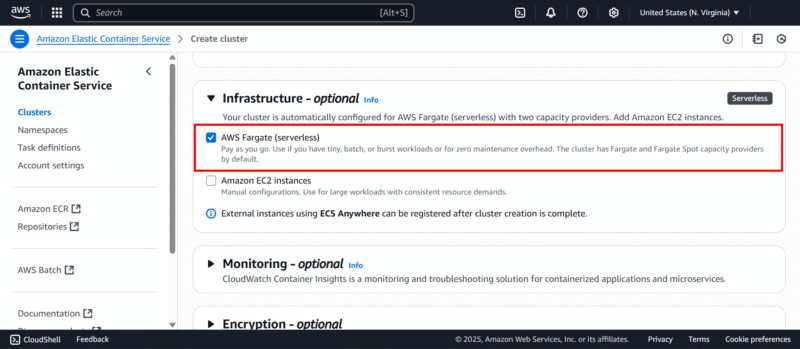
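As a rough illustration, the Python sketch below builds a minimal single-container Fargate task definition as a dictionary and prints it as JSON. The field names (family, requiresCompatibilities, networkMode, cpu, memory, executionRoleArn, taskRoleArn, containerDefinitions, logConfiguration) are standard ECS task definition parameters. Everything else is a placeholder, not a value from a real account: the family name, image, account ID 123456789012, role names, log group and region.

```python
import json


def build_task_definition(family: str, image: str, region: str) -> dict:
    """Return a minimal ECS task definition for a single-container Fargate task.

    All names, ARNs and the account ID 123456789012 are placeholders.
    """
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],  # validate the definition for Fargate
        "networkMode": "awsvpc",                 # Fargate tasks use awsvpc networking
        "cpu": "256",                            # task-level CPU units (0.25 vCPU)
        "memory": "512",                         # task-level memory in MiB
        "executionRoleArn": "arn:aws:iam::123456789012:role/exampleExecutionRole",
        "taskRoleArn": "arn:aws:iam::123456789012:role/exampleTaskRole",
        "containerDefinitions": [
            {
                "name": "web",
                "image": image,
                "essential": True,
                # Entry point plus arguments: serves files over HTTP on port 8080
                "entryPoint": ["python", "-m", "http.server"],
                "command": ["8080"],
                "portMappings": [{"containerPort": 8080, "protocol": "tcp"}],
                "environment": [{"name": "APP_ENV", "value": "staging"}],
                "logConfiguration": {
                    "logDriver": "awslogs",
                    "options": {
                        "awslogs-group": f"/ecs/{family}",
                        "awslogs-region": region,
                        "awslogs-stream-prefix": "web",
                    },
                },
            }
        ],
    }


if __name__ == "__main__":
    task_def = build_task_definition(
        "example-web", "public.ecr.aws/docker/library/python:3.12-slim", "us-east-1"
    )
    print(json.dumps(task_def, indent=2))
```

Generating the document from code like this keeps the JSON consistent across environments. You could, for example, swap the image tag or region per stage.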

2. Register the Task in ECS or EKS

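Register your new task definition with Amazon ECS through the ECS console, the AWS CLI or an SDK. Amazon EKS has no task definitions. There, you describe the workload as a Kubernetes pod specification, and a Fargate profile decides which pods run on Fargate. ECS is the simpler choice for straightforward container setups, while EKS suits teams that already work with Kubernetes.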

This step integrates container orchestration into the workflow, letting the control plane determine where and when to place each Fargate task across the available compute resources.
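Before you register a task definition for Fargate, it can help to catch common mistakes locally. The hypothetical helper below checks a few Fargate requirements:

- awsvpc network mode
- FARGATE listed in requiresCompatibilities
- task-level CPU and memory
- between 1 and 10 container definitions

The limit of 10 container definitions per task definition reflects the ECS quota as we understand it. It is written as an assumption, not a guaranteed value.

```python
# Hypothetical pre-flight check for a Fargate task definition (a plain dict).
# MAX_CONTAINERS is an assumption based on the documented ECS quota.
MAX_CONTAINERS = 10


def fargate_problems(task_def: dict) -> list:
    """Return human-readable problems; an empty list means no issues were found."""
    problems = []
    if task_def.get("networkMode") != "awsvpc":
        problems.append("networkMode must be 'awsvpc'")
    if "FARGATE" not in task_def.get("requiresCompatibilities", []):
        problems.append("requiresCompatibilities should include 'FARGATE'")
    for field in ("cpu", "memory"):
        if field not in task_def:
            problems.append(f"task-level '{field}' is required")
    containers = task_def.get("containerDefinitions", [])
    if not 1 <= len(containers) <= MAX_CONTAINERS:
        problems.append(f"expected 1 to {MAX_CONTAINERS} container definitions")
    return problems
```

If the list comes back empty, you could save the dictionary as taskdef.json and register it with `aws ecs register-task-definition --cli-input-json file://taskdef.json`.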

3. Configure Network and Security

Define how your Fargate tasks connect to the rest of your infrastructure. Fargate tasks run inside an Amazon VPC, so you select the subnets (private or public) the tasks launch into and assign security groups to control inbound and outbound traffic. Network ACLs on those subnets add an optional, subnet-level layer of filtering. Together, these settings give tasks proper isolation and network routing.

Each task gets its own elastic network interface (ENI) and private IP address, which lets users apply security group rules to individual tasks.
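The subnets and security groups end up in the networkConfiguration structure that the ECS RunTask and CreateService APIs accept for awsvpc tasks. The sketch below builds that structure; the subnet and security group IDs are placeholders. The function name and its public_ip flag are our own, not part of any AWS SDK.

```python
def awsvpc_network_config(subnets, security_groups, public_ip=False) -> dict:
    """Build an ECS networkConfiguration for awsvpc (Fargate) tasks.

    Subnet and security group IDs passed in are placeholders in this sketch.
    """
    if not subnets:
        raise ValueError("at least one subnet is required")
    return {
        "awsvpcConfiguration": {
            "subnets": list(subnets),                 # tasks get ENIs in these subnets
            "securityGroups": list(security_groups),  # applied to each task's ENI
            # ENABLED attaches a public IP to the task's ENI; usually DISABLED in private subnets
            "assignPublicIp": "ENABLED" if public_ip else "DISABLED",
        }
    }
```

A task in a public subnet with no NAT gateway or VPC endpoints generally needs a public IP to reach container registries and CloudWatch. In private subnets, outbound access usually goes through a NAT gateway or VPC endpoints instead.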

4. Assign IAM Roles for Access Control

You can assign IAM roles to Fargate tasks for secure interactions with AWS services. The task execution role allows Fargate to pull container images (for example, from Amazon ECR) and write logs to CloudWatch. The task role controls which AWS resources the code in your containers can access. Keeping the two roles separate, and granting each only the permissions it needs, follows the principle of least privilege.
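The sketch below shows the policy documents involved, built as Python dicts.

- **Trust policy:** both roles trust the ECS tasks service principal.
- **Execution role:** commonly gets the AWS managed policy AmazonECSTaskExecutionRolePolicy.
- **Task role:** gets only what the application needs. The read-only S3 policy is a hypothetical example, and the bucket name is a placeholder.

```python
import json

# Trust policy shared by the task execution role and the task role:
# both are assumed by the ECS tasks service principal.
ECS_TASKS_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "ecs-tasks.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

# AWS managed policy commonly attached to the execution role
# (image pulls from Amazon ECR and writing logs to CloudWatch Logs).
EXECUTION_ROLE_MANAGED_POLICY = (
    "arn:aws:iam::aws:policy/service-role/AmazonECSTaskExecutionRolePolicy"
)


def read_only_bucket_policy(bucket: str) -> dict:
    """Hypothetical least-privilege task role policy: read objects from one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(ECS_TASKS_TRUST_POLICY, indent=2))
    print(json.dumps(read_only_bucket_policy("example-bucket"), indent=2))
```

Separating the documents this way makes it harder to grant the application container the broader permissions that only the Fargate agent needs.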

5. Choose Logging and Monitoring Options

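Fargate tasks can send container logs to Amazon CloudWatch Logs through the awslogs log driver. Amazon CloudWatch Container Insights, once enabled for the cluster, collects CPU, memory, network and storage metrics. For advanced use cases, such as routing logs to other destinations, you can use FireLens, which runs a Fluent Bit or Fluentd log router alongside your application.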

Monitoring is essential to track CPU and memory usage, network I/O and container health, allowing teams to act on real-time insights and optimize costs.
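Logging is configured per container. The sketch below builds an awslogs logConfiguration block for a container definition. It also derives the log stream name that, per the ECS documentation, the awslogs driver uses when a stream prefix is set: prefix-name/container-name/ecs-task-id. The log group, region, prefix and task ID values are placeholders.

```python
def awslogs_config(group: str, region: str, prefix: str) -> dict:
    """logConfiguration block for an ECS container definition using awslogs."""
    return {
        "logDriver": "awslogs",
        "options": {
            "awslogs-group": group,           # CloudWatch Logs group (placeholder)
            "awslogs-region": region,         # Region of the log group
            "awslogs-stream-prefix": prefix,  # required to get per-task stream names
        },
    }


def awslogs_stream_name(prefix: str, container_name: str, task_id: str) -> str:
    """Stream name format: prefix-name/container-name/ecs-task-id."""
    return f"{prefix}/{container_name}/{task_id}"
```

Knowing the stream name format makes it easy to jump from a task ID in the ECS console to its logs. Container Insights, by contrast, is switched on at the cluster level through the containerInsights cluster setting rather than per container.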

6. Launch the Fargate Task or Service

Launch your container as a standalone Fargate task or a persistent service. Tasks are great for batch or short-lived jobs, while services can keep an application running long-term. Fargate abstracts server management and handles the provisioning of scalable compute capacity, networking and container runtime setup.
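The difference between a standalone task and a service shows up in the request parameters. The sketch below builds parameter dicts for the ECS RunTask and CreateService APIs. With boto3 you would pass them as `ecs_client.run_task(**req)` or `ecs_client.create_service(**req)`. The cluster, service and task definition names are placeholders, and the helper functions are hypothetical.

```python
def run_task_request(cluster, task_definition, network_configuration, count=1):
    """Parameters for a one-off or batch run: tasks stop when their essential container exits."""
    return {
        "cluster": cluster,
        "taskDefinition": task_definition,  # family:revision or full ARN
        "launchType": "FARGATE",
        "count": count,
        "networkConfiguration": network_configuration,
    }


def create_service_request(cluster, service_name, task_definition,
                           network_configuration, desired_count=2):
    """Parameters for a long-running service: ECS keeps desiredCount tasks running."""
    return {
        "cluster": cluster,
        "serviceName": service_name,
        "taskDefinition": task_definition,
        "launchType": "FARGATE",
        "desiredCount": desired_count,  # replaced automatically if tasks stop
        "networkConfiguration": network_configuration,
    }
```

The key design difference is count versus desiredCount. RunTask starts tasks once, while a service continuously reconciles the number of running tasks against its desired count.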

7. Configure Elastic Load Balancing

If your Fargate tasks serve external users or APIs, you can configure Elastic Load Balancing to distribute traffic across multiple tasks. Fargate works with Application Load Balancers (ALBs) and Network Load Balancers (NLBs) to improve availability and fault tolerance. Because each task has its own ENI, the target group must use the “ip” target type rather than “instance”. You can define routing rules, SSL/TLS termination and health checks through the load balancer.
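Attaching a load balancer to a Fargate service means adding a loadBalancers entry to the CreateService request. The hypothetical helper below builds that entry and rejects target groups that don't use the ip target type. The target group ARN, container name and port are placeholders.

```python
def service_load_balancer(target_group_arn, target_type, container_name, container_port):
    """Build the loadBalancers list for an ECS CreateService request.

    Fargate tasks register by IP address (awsvpc), so only 'ip' target groups work.
    """
    if target_type != "ip":
        raise ValueError("Fargate services need a target group with target type 'ip'")
    return [
        {
            "targetGroupArn": target_group_arn,
            "containerName": container_name,  # must match a name in the task definition
            "containerPort": container_port,  # must match a container port mapping
        }
    ]
```

The container name and port must line up with the task definition, which is a common source of deployment errors.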

8. Configure Automatic Scaling

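Amazon ECS service auto scaling, which is built on Application Auto Scaling, adjusts the number of running Fargate tasks. It can scale on CloudWatch metrics, such as average CPU or memory utilization, or on CloudWatch alarms. This scaling is horizontal: it adds or removes tasks. Changing the CPU and memory of each task (vertical scaling) is not automatic; it requires registering a new task definition revision and updating the service. Automatic scaling provides sufficient capacity during high traffic and lowers costs during quiet periods.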
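To build intuition for target tracking, here is a simplified, hypothetical model. It scales the task count in proportion to how far a metric (such as average CPU utilization) is from its target, then clamps the result to the service's minimum and maximum capacity. This is not Application Auto Scaling's actual algorithm, which also applies cooldowns and other safeguards.

```python
import math


def desired_task_count(current_tasks: int, metric_value: float, target_value: float,
                       min_tasks: int, max_tasks: int) -> int:
    """Simplified proportional model of target tracking for an ECS service."""
    if current_tasks < 1 or target_value <= 0:
        raise ValueError("need at least one running task and a positive target")
    # Scale the task count by the ratio of observed metric to target, rounding up
    proposed = math.ceil(current_tasks * metric_value / target_value)
    # Keep the result inside the service's configured capacity range
    return max(min_tasks, min(max_tasks, proposed))
```

For example, four tasks averaging 90% CPU against a 60% target would grow to six tasks under this model. In AWS itself, you would configure this declaratively. You register the service's ecs:service:DesiredCount as a scalable target, then attach a target tracking policy that uses a predefined metric such as ECSServiceAverageCPUUtilization.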

9. Run and Monitor the Application

Monitor your containerized applications in real time once you deploy them. You can view logs, metrics and alarms through Amazon CloudWatch, the Amazon ECS console and third-party tools. You can also inspect each running task, track container health and set up notifications for performance issues or crashes.
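Container health tracking starts with a healthCheck block in the container definition. The sketch below builds one and checks each field against the ranges the ECS documentation lists, as we understand them:

- interval: 5 to 300 seconds
- timeout: 2 to 120 seconds
- retries: 1 to 10
- startPeriod: 0 to 300 seconds

The curl command in the comment is a placeholder and assumes curl exists in the image.

```python
def container_health_check(command: str, interval=30, timeout=5,
                           retries=3, start_period=0) -> dict:
    """Build an ECS container healthCheck block, validating documented ranges."""
    limits = {
        "interval": (interval, 5, 300),        # seconds between checks
        "timeout": (timeout, 2, 120),          # seconds before a check counts as failed
        "retries": (retries, 1, 10),           # failures before the container is UNHEALTHY
        "startPeriod": (start_period, 0, 300), # grace period after container start
    }
    for name, (value, low, high) in limits.items():
        if not low <= value <= high:
            raise ValueError(f"{name}={value} is outside {low}-{high}")
    return {
        # CMD-SHELL runs the command through the container's default shell,
        # e.g. "curl -f http://localhost:8080/ || exit 1" (placeholder)
        "command": ["CMD-SHELL", command],
        "interval": interval,
        "timeout": timeout,
        "retries": retries,
        "startPeriod": start_period,
    }
```

The resulting health status is reported for each container and task in the ECS console and API, which is what alerts and deployments can key off.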

10. Terminate or Update Tasks as Needed

You can stop tasks manually or automate their termination based on logic or time schedules. You can also update services with new container versions using rolling deployments. This makes your environment agile, safe and responsive to change.

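Rolling deployments can deliver zero-downtime updates. Amazon ECS starts new tasks and waits for them to pass their health checks before stopping the old ones, within the limits set by the service’s minimum healthy percent and maximum percent.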
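The two deployment settings translate into concrete task counts. Per the ECS documentation, minimumHealthyPercent is a floor on running tasks (rounded up) and maximumPercent is a ceiling on running plus pending tasks (rounded down), both relative to desiredCount. The defaults for rolling updates are 100 and 200. The sketch below computes those bounds; the function name is our own.

```python
def rolling_update_bounds(desired_count: int, minimum_healthy_percent: int,
                          maximum_percent: int):
    """Return (min_running, max_total, old_tasks_stoppable_first, new_tasks_startable_first)."""
    # Ceiling division: the minimum healthy floor is rounded up
    min_running = -(-desired_count * minimum_healthy_percent // 100)
    # Floor division: the maximum ceiling is rounded down
    max_total = desired_count * maximum_percent // 100
    # Old tasks ECS may stop before replacements are healthy
    can_stop_first = desired_count - min_running
    # New tasks ECS may start before stopping any old task
    can_start_first = max_total - desired_count
    return min_running, max_total, can_stop_first, can_start_first
```

With the defaults and four tasks, ECS can start all four replacements before stopping anything. Lowering the minimum healthy percent trades some capacity during the rollout for a smaller peak task count.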

AWS Fargate vs Other AWS Services

AWS Fargate is often compared with other compute services, such as EC2, ECS, EKS and Lambda. Each service offers different trade-offs in terms of container management, operational overhead, control, scalability and ease of use.

How do you choose between AWS Fargate and these alternatives? The key lies in understanding Fargate’s functionality, the nature of your workload and how much of the underlying infrastructure you want to manage yourself. Let’s break down these differences in detail.

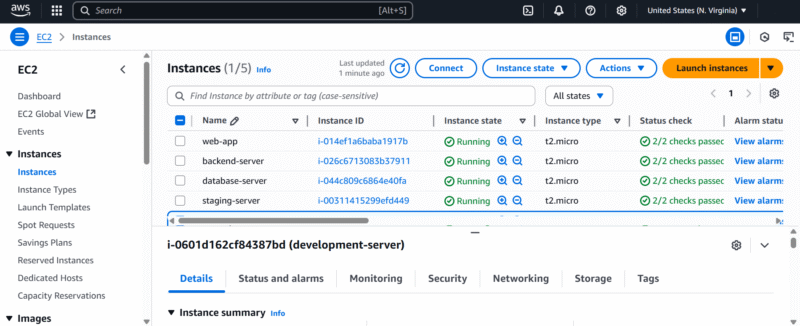

AWS Fargate vs Amazon EC2 (Elastic Compute Cloud)

Amazon EC2 offers complete control over your compute resources by letting you manually launch and configure virtual machines. You can choose the instance types, manage underlying servers, scale clusters and install software all on your own. While it is highly flexible, it also comes with high operational overhead.

AWS Fargate eliminates the need to manage servers or optimize cluster packing manually. You simply define your task sizes and the other required task definition parameters, and Fargate provisions the underlying capacity behind the scenes.

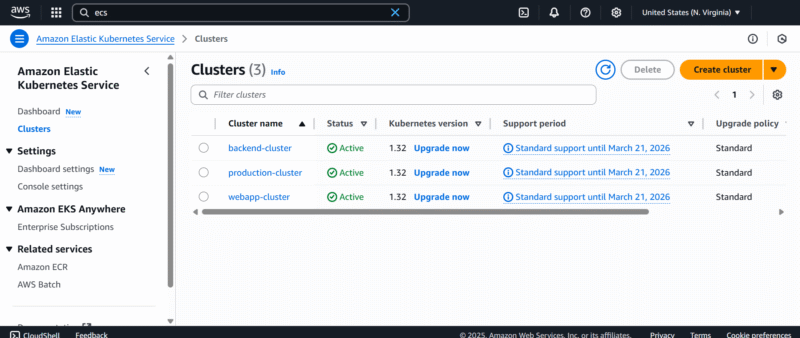

AWS Fargate vs EKS (Elastic Kubernetes Service)


Amazon EKS is a managed Kubernetes service for users who want to orchestrate containers with Kubernetes tools. Without Fargate, EKS runs pods on EC2 worker nodes. That involves choosing instance types, provisioning capacity and keeping those nodes patched and secure.

Using Fargate with EKS brings a serverless computing model into Kubernetes itself. You create a Fargate profile whose selectors match a namespace and, optionally, pod labels. Matching pods run on Fargate without you configuring the underlying servers, giving you the benefits of Kubernetes without the node management hassle.
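The way a Fargate profile picks pods can be sketched as a simple matching rule:

- A pod runs on Fargate if any selector in the profile matches it.
- A selector matches when its namespace equals the pod's namespace and every label in the selector appears on the pod with the same value.

The Python model below uses exact string matching only and is a simplification, not the EKS scheduler's actual code. The namespaces and labels in the test are placeholders.

```python
def selector_matches(selector: dict, pod_namespace: str, pod_labels: dict) -> bool:
    """A selector matches if the namespace is equal and all selector labels match."""
    if selector["namespace"] != pod_namespace:
        return False
    # Labels are optional; an empty label set matches every pod in the namespace
    return all(pod_labels.get(key) == value
               for key, value in selector.get("labels", {}).items())


def runs_on_fargate(selectors: list, pod_namespace: str, pod_labels: dict) -> bool:
    """A pod is scheduled on Fargate if any selector in the profile matches it."""
    return any(selector_matches(s, pod_namespace, pod_labels) for s in selectors)
```

A common pattern is to send a whole namespace to Fargate and, in a shared namespace, opt individual workloads in with a label.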

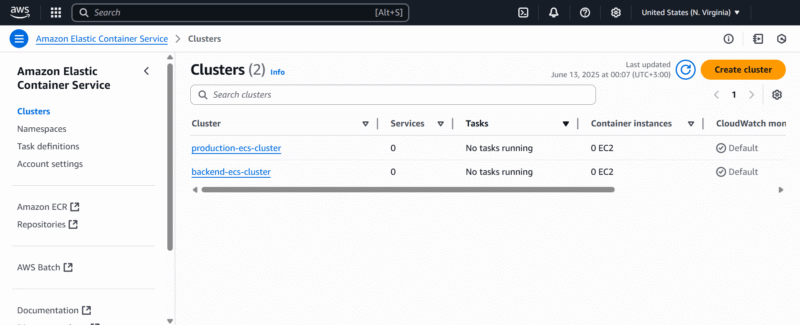

AWS Fargate vs Amazon ECS (Elastic Container Service)

Amazon ECS offers two main launch types for its workloads: EC2 and AWS Fargate. The EC2 launch type provides more configuration options, but you have to set up the cluster’s instances and manage their lifecycle yourself.

With the Fargate launch type, AWS Fargate provisions compute for each task based on the CPU and memory you request, and ECS service auto scaling can add or remove tasks as demand changes.

AWS Lambda vs Fargate


AWS Lambda is ideal for event-driven tasks that run for a maximum of 15 minutes. Lambda functions run in response to triggers, such as HTTP requests or file uploads. It is perfect for microservices or automation scripts that run for short periods of time.

Lambda has limitations when dealing with multiple containers, large CPU and memory needs, operations running longer than 15 minutes and complex runtime environments. This is where Fargate comes into play. It runs long-lived containers, including tasks made up of several containers, with no fixed time limit and with larger CPU and memory allocations than a single Lambda function allows.
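The trade-offs above can be condensed into a rough, hypothetical rule of thumb. The 15-minute limit comes from the discussion above. The 10,240 MB memory ceiling is Lambda's documented maximum function memory. The decision order is our own simplification, not official AWS guidance.

```python
LAMBDA_MAX_SECONDS = 15 * 60    # maximum Lambda invocation duration
LAMBDA_MAX_MEMORY_MB = 10_240   # maximum memory for a single Lambda function


def suggest_compute(expected_runtime_s: float, memory_mb: int, event_driven: bool,
                    needs_multiple_containers: bool = False) -> str:
    """Hypothetical heuristic: return 'lambda' or 'fargate'."""
    if expected_runtime_s > LAMBDA_MAX_SECONDS:
        return "fargate"   # the job would hit Lambda's timeout
    if memory_mb > LAMBDA_MAX_MEMORY_MB or needs_multiple_containers:
        return "fargate"   # too large, or shaped as several cooperating containers
    # Short, small jobs: Lambda fits event-driven work; steady services suit Fargate
    return "lambda" if event_driven else "fargate"
```

Real decisions also weigh cost at your traffic level, cold-start tolerance and existing tooling, none of which this sketch models.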

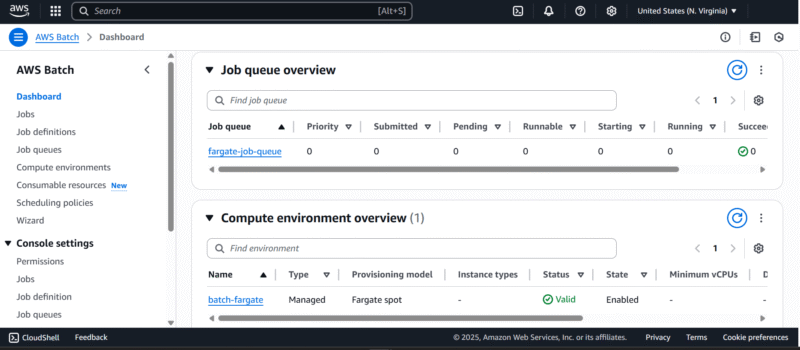

AWS Batch vs Fargate

AWS Batch is a fully managed service for running batch workloads on EC2 instances, EC2 Spot Instances or Fargate. It is tailored for compute-intensive tasks, such as scientific simulations.

Originally, AWS Batch ran jobs only in EC2-based compute environments, where you chose instance types and sized the environment. With Fargate compute environments, there are no instances to manage, which makes it easier to process jobs.

AWS Fargate Benefits

AWS Fargate offers a modern and efficient approach to container management. It enables developers and DevOps teams to focus more on their applications and less on infrastructure management. The following are the key benefits that make Fargate a game changer for modern cloud workloads.

- No server management: Fargate removes the need to provision, patch or scale underlying servers. You simply define your application’s requirements in a task definition, and Fargate provisions infrastructure sized to the CPU and memory you request.

- Economical pay-as-you-go pricing: You are billed for the vCPU and memory that your Fargate tasks request, only while those tasks run. This means you don’t have to overprovision a cluster of instances or pay for idle servers.

- Auto-scaling: With ECS service auto scaling configured, Fargate-backed services scale to meet demand without manual intervention. When traffic spikes, more tasks are launched, each with its own Fargate capacity. When demand drops, tasks are removed to save on costs.

- High availability and reliability: When you provide subnets in multiple Availability Zones, Amazon ECS spreads a service’s Fargate tasks across them, providing resilience against infrastructure failure. When integrated with Elastic Load Balancing, your applications can distribute traffic evenly across healthy tasks, which reduces downtime and improves performance under varying load conditions.

- Enhanced security by default: Each Fargate task runs in its own isolation boundary and does not share its kernel, CPU, memory or network interface with other tasks, limiting the potential attack surface. Security groups and network ACLs control inbound and outbound traffic, while IAM policies control which AWS resources each task can access.

AWS Fargate Drawbacks & Limitations

It is important to understand Fargate’s limitations to determine if it’s the right fit for your workloads. Here are some notable drawbacks and limitations of AWS Fargate.

- Limited customization and control: Since Fargate eliminates the need to manage underlying servers, it also removes your ability to customize those hosts or fine-tune system configurations. You can’t install custom kernel modules, tweak OS-level settings or use special monitoring agents that depend on host-level access.

- Higher cost for long-running workloads: Fargate is cost-effective for short-lived or intermittent workloads, but it can be more expensive than EC2 for long-running tasks or high-throughput services. The abstraction layer, convenience and automation come at a price. For always-on services that keep instances well utilized, self-managed EC2 instances are often more economical.

- Lack of persistent storage: Fargate uses an ephemeral storage model. Each task gets temporary storage (20 GiB by default, expandable up to 200 GiB) that is wiped clean when the task stops. On its own, this makes Fargate unsuitable for stateful applications, like databases or file processing systems. You can mount Amazon Elastic File System (EFS) volumes for persistent shared file storage, but this introduces additional complexity, latency and cost.

- Strict task-sizing constraints: AWS Fargate requires users to choose task sizes from fixed combinations of CPU and memory. For example, a 0.25 vCPU task can only have 0.5 GB, 1 GB or 2 GB of memory. You can’t freely mix and match resources beyond these combinations, which may lead to overprovisioning or underprovisioning.

- Networking and performance constraints: Fargate’s networking model has several limitations that can affect performance. It lacks multicast and broadcast support, which complicates legacy or clustered applications. You also can’t tune a task’s network bandwidth, which can cause bottlenecks for data-intensive workloads. Finally, communication between tasks can be slower than between containers using host mode networking on EC2.
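The task-sizing constraint is easiest to see in code. The table below lists the Linux CPU and memory combinations from the ECS documentation, in CPU units (1,024 units = 1 vCPU) and MiB. The 8 and 16 vCPU sizes need platform version 1.4.0 or later. The helper picks the smallest CPU tier that covers a workload's needs, then the smallest memory value in that tier. That is a simple heuristic; it does not guarantee the cheapest combination.

```python
# Valid Fargate (Linux) task sizes: CPU units -> allowed memory values in MiB.
VALID_SIZES = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
    8192: list(range(16384, 61440 + 1, 4096)),     # 4 GiB increments
    16384: list(range(32768, 122880 + 1, 8192)),   # 8 GiB increments
}


def smallest_task_size(cpu_needed: int, memory_needed_mib: int):
    """Return the first (cpu, memory) pair, smallest CPU tier first, covering both needs."""
    for cpu in sorted(VALID_SIZES):
        if cpu < cpu_needed:
            continue
        for memory in VALID_SIZES[cpu]:
            if memory >= memory_needed_mib:
                return cpu, memory
    raise ValueError("request exceeds the largest Fargate task size")
```

A workload needing 0.25 vCPU and about 3 GB, for instance, has to move up to the 0.5 vCPU tier, because 0.25 vCPU tops out at 2 GB. This is exactly the overprovisioning described above.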

AWS Fargate Pricing

AWS Fargate uses a pay-as-you-go pricing structure. When you define your task, you specify its size by selecting the vCPU and memory your containers need. AWS bills for those requested resources, plus any ephemeral storage beyond the included 20 GB. Billing runs from the time the container image starts downloading until the task stops, rounded up to the nearest second. Rates vary by Region, operating system and CPU architecture.

Fargate has two cost-saving options to optimize spending: Fargate Spot and Compute Savings Plans. Fargate Spot offers discounts of up to 70%, but its tasks can be interrupted with a two-minute warning. That makes it a good fit for fault-tolerant workloads like testing and data processing. Compute Savings Plans provide reduced rates in exchange for committing to a consistent amount of usage over one or three years.
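A back-of-the-envelope cost estimate follows directly from this billing model. Fargate bills requested vCPU and memory per second, with a one-minute minimum for Linux tasks. The hourly rates are parameters in the sketch below because they vary by Region, operating system and architecture; look them up on the Fargate pricing page. The discount argument is a hypothetical stand-in for Spot or Savings Plans savings, and ephemeral storage charges are left out.

```python
import math


def estimate_task_cost(vcpu: float, memory_gb: float, runtime_seconds: float,
                       vcpu_hour_rate: float, gb_hour_rate: float,
                       discount: float = 0.0) -> float:
    """Rough cost of one Linux Fargate task; rates are per vCPU-hour and per GB-hour."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be a fraction between 0 and 1")
    # Per-second billing, rounded up, with a one-minute minimum
    billed_seconds = max(60, math.ceil(runtime_seconds))
    hours = billed_seconds / 3600
    on_demand = (vcpu * vcpu_hour_rate + memory_gb * gb_hour_rate) * hours
    return on_demand * (1 - discount)
```

Multiplying a single task's estimate by the number of tasks and hours in a month gives a quick baseline to compare against an equivalent EC2 setup.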

New AWS accounts may also be eligible for AWS Free Tier offers. Check the current Free Tier terms before planning around them, since the services and usage levels they cover change over time.

Additionally, some configurations incur extra charges from other services. Examples include data transfer, storing and pulling container images in Amazon ECR, Elastic Load Balancing, Amazon CloudWatch Container Insights and Amazon EFS for persistent storage.

Learn more about AWS pricing in our AWS cost optimization guide and our AWS pricing guide.

Final Thoughts

AWS Fargate is a modern, serverless solution for running containerized applications. It abstracts away the underlying infrastructure and lets developers focus on application logic rather than provisioning servers, scaling compute resources or patching systems. It integrates with AWS tools and services to provide a secure, cost-efficient and scalable environment.

Thank you for taking the time to explore AWS Fargate with us. Are you currently using containers in your architecture? What challenges are you facing with container management or workload scaling? Let us know in the comments section below. We would love to hear your thoughts.

FAQ: What Is AWS Fargate Used For?

AWS Fargate is a serverless compute engine that allows you to run containers without managing the underlying servers. It simplifies container management by automatically handling the provisioning, scaling and infrastructure.

What Is the Difference Between EC2 and AWS Fargate?

EC2 requires manual server management and gives you complete control over virtual machines. AWS Fargate eliminates the need to manage servers by running containers on demand, trading control for simplicity.

What Is the Difference Between Amazon ECS and AWS Fargate?

Amazon ECS is a container orchestration service that can run tasks on self-managed EC2 instances or on serverless Fargate capacity. With Fargate, you don’t have to manage the underlying nodes.

What Is the Difference Between AWS Lambda and AWS Fargate?

Lambda runs short-lived, event-driven functions with a maximum duration of 15 minutes, while Fargate runs long-lived containers with no fixed time limit.