ChatGPT Health Launches Amid Privacy, Security and Accuracy Concerns

This week, OpenAI announced the beta launch of ChatGPT Health, a new tab that lets users ask health and wellness questions and centralize their medical records.

According to ChatGPT’s announcement about the Health tab, “over 230 million people globally ask health and wellness related questions on ChatGPT every week,” making health “one of the most common ways people use ChatGPT today.”

Key features of ChatGPT Health include the ability for users to connect medical records and wellness apps, such as Apple Health, Function and MyFitnessPal — though medical record integrations and some apps are available only in the U.S.

ChatGPT Health is currently in beta. Free, Go, Plus and Pro users can join the waitlist, and the company plans to expand access to all web and iOS users in the coming weeks. ChatGPT Health is not available in the U.K., EEA or Switzerland, and saving medical records is available only to U.S. users.

How ChatGPT Health Works

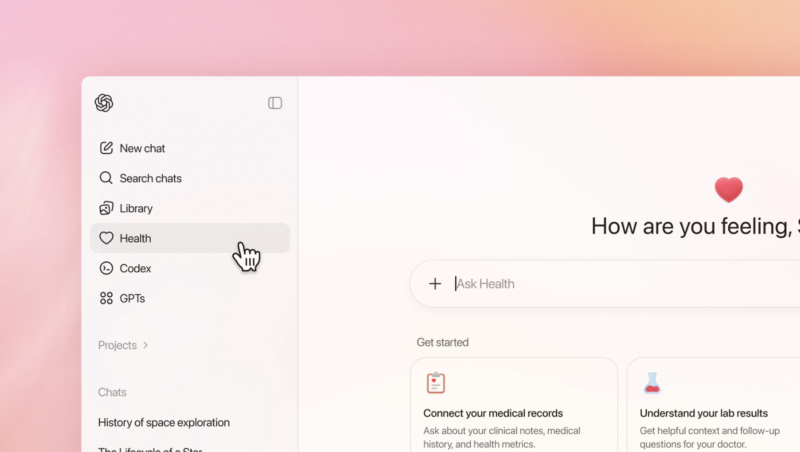

ChatGPT Health users can find the tool under the “Health” tab in the left-hand menu.

ChatGPT Health is not intended to replace medical care or to provide diagnosis or treatment. “ChatGPT can help you understand recent test results, prepare for appointments with your doctor, get advice on how to approach your diet and workout routine, or understand the tradeoffs of different insurance options based on your healthcare patterns,” the announcement explained.

Health conversations won’t be used for model training. Health also keeps “separate memories,” and information flows only one way: Health can reference background information you’ve saved in ChatGPT, but health information is not shared back into regular ChatGPT conversations.

Additionally, if users start a health-related conversation in regular ChatGPT, it will suggest moving the conversation into Health for the added security and privacy. Users can also view or delete “memories” within Health or in the “Personalization” section of Settings.

Security and Privacy Concerns

ChatGPT Health will also provide additional privacy and security through “purpose-built encryption and isolation to keep health conversations protected and compartmentalized,” according to ChatGPT’s announcement.

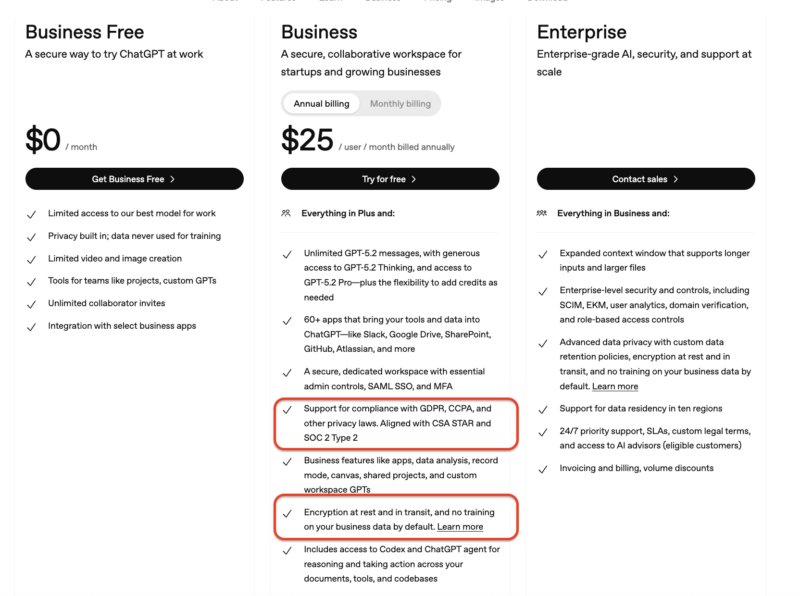

According to ChatGPT’s current plan descriptions, encryption at rest and in transit is listed only for Business and Enterprise plans, not for lower-tier plans.

It’s unclear whether ChatGPT Health will offer the same protections as the Business and Enterprise plans (encryption at rest with AES-256 and in transit with TLS 1.2+) or additional security.

The Health announcement says that “conversations and files across ChatGPT are encrypted by default at rest and in transit as part of our core security architecture. Due to the sensitive nature of health data, Health builds on this foundation with additional, layered protections—including purpose-built encryption and isolation—to keep health conversations protected and compartmentalized.”

Some experts remain concerned about how users’ health information will be safeguarded, including Andrew Crawford, senior privacy counsel at the Center for Democracy and Technology in the U.S. “New AI health tools offer the promise of empowering patients and promoting better health outcomes, but health data is some of the most sensitive information people can share and it must be protected,” Crawford told the BBC.

In a separate statement emailed to CNET, Crawford explained that “The U.S. doesn’t have a general-purpose privacy law, and HIPAA only protects data held by certain people like health care providers and insurance companies.”

“The recent announcement by OpenAI introducing ChatGPT Health means that a number of companies not bound by HIPAA’s privacy protections will be collecting, sharing and using people’s health data. And since it’s up to each company to set the rules for how health data is collected, used, shared and stored, inadequate data protections and policies can put sensitive health information in real danger.”

Andrew Crawford, senior privacy counsel, the Center for Democracy and Technology

Health Hallucinations

Beyond privacy and security, healthcare experts worry that ChatGPT Health users could receive inaccurate information, because generative AI chatbots are prone to hallucinations: generating false or misleading information and presenting it in a confident, convincing way.

Reducing hallucinations is a priority for AI developers, but eliminating them entirely may be impossible given how large language models work. After training on massive amounts of text, these tools learn statistical patterns and predict the most probable next word in a sequence, rather than “knowing” right or wrong answers.
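To make the “most probable next word” idea concrete, here is a toy sketch in Python. It is a simple bigram word counter, not how ChatGPT or any production model is actually built, and the corpus and function names are hypothetical. It shows how a system that only picks the statistically most common continuation will repeat an error whenever that error is common in its data.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus (illustrative only). The wrong answer appears
# more often than the right one; Australia's capital is actually Canberra.
corpus = [
    "the capital of australia is sydney",
    "the capital of australia is sydney",
    "the capital of australia is canberra",
]

def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, word):
    """Convert raw follow-counts into a probability distribution."""
    followers = counts[word]
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()}

def predict_next(counts, word):
    """Greedy prediction: return the single most probable next word."""
    probs = next_word_probs(counts, word)
    return max(probs, key=probs.get)

counts = train_bigrams(corpus)
print(next_word_probs(counts, "is"))
print(predict_next(counts, "is"))  # sydney
```

The toy model answers “sydney” because that continuation is more frequent in its data, not because it is true. Real language models are vastly more sophisticated, but the underlying objective of predicting likely text rather than verified facts is the same.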

Training models on ever-increasing amounts of text also means they may draw on unreliable or outdated sources.

In one recent example, a 60-year-old man seeking to reduce his sodium intake took ChatGPT’s advice to swap table salt for sodium bromide and ended up in a hospital’s psychiatric ward for three weeks.

OpenAI has acknowledged that some of its newer reasoning models hallucinate more often. “Hallucinations are not inherently more prevalent in reasoning models, though we are actively working to reduce the higher rates of hallucination we saw in o3 and o4-mini,” an OpenAI spokesperson told New Scientist. “We’ll continue our research on hallucinations across all models to improve accuracy and reliability.”

OpenAI’s privacy policy openly acknowledges the predictive element of the models, as well as the potential for inaccuracies. Under the “Your Rights” section, it says: “Services like ChatGPT generate responses by reading a user’s request and, in response, predicting the words most likely to appear next. In some cases, the words most likely to appear next may not be the most factually accurate. For this reason, you should not rely on the factual accuracy of output from our models.”

My Take

Yes, there are serious concerns about users adding sensitive health information to the tool while privacy and security expectations vary so widely across regions and industries. However, 230 million people already ask ChatGPT health and wellness questions every week. If ChatGPT can better protect those users with additional encryption and compartmentalization, that’s a step in the right direction.

That said, ChatGPT Health users should understand that the tool isn’t covered by HIPAA and that its security protections aren’t foolproof. And when users receive medical advice, the tool should warn them about potential inaccuracies and hallucinations and encourage them to confirm the advice with a health professional.
