Every Major AI Company Is Getting Sued!

This Deep Dive was originally sent on July 30th, 2025.

Alright, grab a cup of coffee, because we need to talk about what’s brewing in the AI industry! It’s been a pretty wild ride for nearly three years, with a flurry of lawsuits making their way through the courts.

We’re seeing tech giants like OpenAI, Meta, Google, Anthropic, and Stability AI facing off against programmers, authors, artists, and even major media organizations.

The core issue here? Whether AI companies have the right to train their models on copyrighted content without permission or payment.

Things just heated up significantly. In June 2025, judges sided with Anthropic and Meta in two landmark copyright rulings. But that very same month, new lawsuits arrived from big names like Disney, Universal, and even Reddit. Talk about a dynamic situation.

Meanwhile, in a move that could reshape the game entirely, Cloudflare just dropped a big one: They’re now blocking AI crawlers by default and introducing a “pay-per-crawl” model.

This means the days of AI companies freely accessing training data could be behind us, fundamentally changing how data is acquired. It’s a fascinating development, especially when you consider how crucial data and context are for AI models.

At its heart, this isn’t just legal maneuvering. This is about who really controls the future of AI and, for those of us creating content, whether our creative work retains its value in this rapidly evolving landscape.

It’s certainly a lot to keep an eye on, but we’ve broken things down to help you understand the landscape and stay ahead of the curve in this wild tech journey.

Let’s dive in.

What’s Going On?

The lawsuits fall into distinct battlegrounds, each targeting different aspects of how AI companies operate:

- Code Generation Under Fire: GitHub Copilot faces allegations of copying and republishing open-source code without proper attribution or license compliance, raising fundamental questions about how AI handles existing intellectual property frameworks.

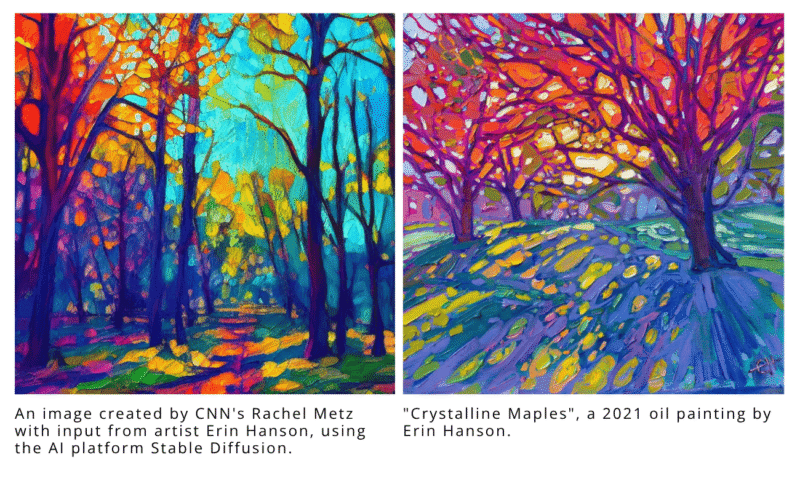

- Visual Artists Mobilize: Artists like Sarah Andersen, Kelly McKernan, and Karla Ortiz argue that AI image generators essentially function as “high-tech collage tools,” scraping billions of copyrighted images to train systems that can then reproduce their specific artistic styles without consent or compensation.

- Authors and Publishers Unite: Over 8,500 authors signed the Authors Guild letter demanding AI companies stop using their works without permission—including high-profile writers like Margaret Atwood, Dan Brown, and Jodi Picoult. Individual lawsuits involve prominent authors like Sarah Silverman, George R.R. Martin, Ta-Nehisi Coates, Michael Chabon, and Junot Díaz, with cases claiming systems like ChatGPT were trained on books obtained from “shadow libraries”—essentially pirated content repositories.

- Media Industry Revolt: News organizations argue that AI systems not only steal their content but actively undermine their survival. The New York Times claims OpenAI’s models “mimic the Times’ style and recite its content verbatim,” while competing as a source of reliable information and diverting traffic that would otherwise generate advertising revenue. Following the Times’ lead, eight major newspapers—including the Chicago Tribune, Denver Post, New York Daily News, and Orlando Sentinel—sued OpenAI and Microsoft, while the Wall Street Journal and New York Post targeted Perplexity AI for similar violations.

- Entertainment Giants Strike Back: Sony Music Entertainment, Universal Music Group, and Warner Records are seeking up to $150,000 per copied work in their lawsuits against AI music generators Suno and Udio, alleging that generated songs sound strikingly similar to copyrighted hits like Chuck Berry’s “Johnny B. Goode” and Mariah Carey’s “All I Want for Christmas Is You.” Meanwhile, Disney and Universal have sued AI image generator Midjourney for creating unauthorized copies of their copyrighted works.

- Personal Data Harvesting: Platforms face lawsuits over using private messages and user-generated content for AI training without explicit consent, while court filings reveal some companies allegedly used pirated content with executive-level approval.

- Industry Practices Shifting: The legal pressure is already changing business practices, with companies like Cloudflare blocking AI crawlers by default and introducing “pay-per-crawl” pricing models that could fundamentally alter how AI companies access training data.
